The logo above is the property of Let’s Encrypt.

Introduction

If you’re unfamiliar, Let’s Encrypt allows you to register multiple domains and subdomains to get a valid SSL certificate (i.e., valid as in signed by a trusted third party Certificate Authority, CA) for encrypting your services. They also provide a utility for persistently maintaining the registration over time so that your certificates are always valid.

Q: Why not use self-signed certificates and be done with it? This is internal, right?

A: Yes, you can do that. And then because it’s not signed by a trusted third party CA, you can manually share and accept that key all over the place so your browsers will stop complaining, etc. And then when you go to configure your container registry or other services, you can disable security so that it will accept the certificate, etc. And if you ever decide to expose that service to the internet, you will likely want to swap up to trusted keys to keep from doing the above to your users.

Yes, sounds fun to me too. Or: we can do this with a legitimate certificate and really simplify things going forward.

Understanding the Problem

In order to get a valid SSL certificate, one generally needs the following:

1. A valid external IP address.

2. A valid, publicly registered domain name (or one from DynDNS) that points to #1.

For Let’s Encrypt to work, you also need its registration process to come from #1 when it tries to access #2 on port 443 (HTTP over SSL).

So let’s say you have an internal domain name that cannot be used externally because it does not end in a valid top-level domain (.com, .edu, etc.). However, you do have a valid external domain (for your website) which points to your website host’s server address.

It’s a pretty typical configuration for a small business or individual, right?

Important: You will also need the ability to add subdomains to your DNS zone at that registrar in order to use what I am about to propose.

Solving the Problem

It turns out we can meet the above requirements very simply using LinuxServer.io’s linuxserver/letsencrypt Docker image and a simple NAT configuration. The steps to follow, in order, are:

1. Add a subdomain (or multiple) at your DNS registrar that points back to an external IP address.

2. Configure the firewall to direct port 443 from the external IP in #1 to your container/server that will be running the Let’s Encrypt service.

3. Optional but Recommended: Configure a basic web service (e.g., nginx) and map port 80 at #2 to this service so you can verify your firewall configuration.

4. Set up the container/server that runs the Let’s Encrypt service.

5. Share the SSL certificates with the services that need them.

6. Optional: Set up internal DNS aliasing.

These steps will result in only exposing the SSL maintenance server to the internet while keeping the service(s) using the certificate(s) internal to the local domain.

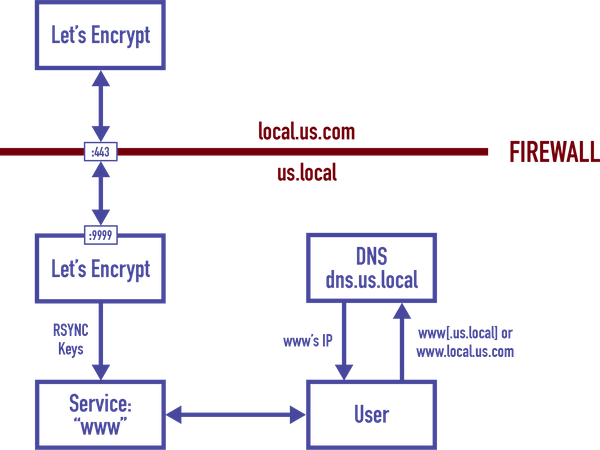

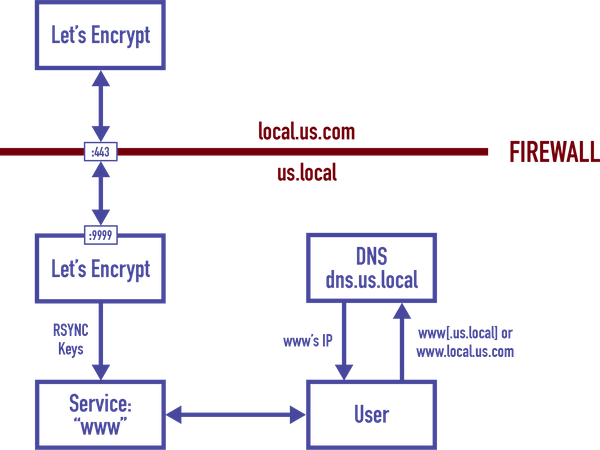

We’ll be referencing the domain names, etc. in this image as our example configuration.

Along the way we’ll discuss slight changes you can make to the steps and the impact of those decisions. Let’s get started!

1. DNS Registration

We cannot get into much detail here since the specifics of how you do this depend on your registrar. Some helpful key phrases you should look for are:

- DNS Zone

- Subdomain

- DNS Records

Once you’ve identified the area of your registrar where you can edit this information, what you need to add is an A Record for your subdomain. Set the record’s address to the external IP address where your SSL registration will originate (i.e., your server, not your website’s).
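Once the record has propagated, a quick lookup can confirm it before you move on. The following is a minimal sketch, assuming `dig` is installed. The helper name `a_record_matches`, the address 203.0.113.10 (a documentation-range stand-in for your external IP), and the choice of 1.1.1.1 as a public resolver are all illustrative assumptions rather than part of the setup described here:

```shell
# Hypothetical helper: succeeds when the public A record for NAME is EXPECTED_IP.
# Usage: a_record_matches NAME EXPECTED_IP [RESOLVER]
a_record_matches() {
    # Ask a public resolver (default 1.1.1.1, an assumption) so that an
    # internal DNS server cannot answer on the record's behalf.
    # dig +short prints one address per line; grep -Fx needs an exact line match.
    dig +short "@${3:-1.1.1.1}" "$1" A | grep -Fxq -- "$2"
}
```

For example, `a_record_matches www.local.us.com 203.0.113.10 && echo "A record OK"` prints the message only when the record resolves to that address.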

2. Firewall Redirection

Configuring Let’s Encrypt from within your firewalled network requires that the client’s challenge traffic uses the same IP address that the domain and subdomain(s) you are configuring point to. Naturally, the details will vary based on the type of firewall you have. In the above example, a simple dynamic network address translation rule redirects requests arriving on port 443 of the external IP(s) to the internal service’s exposed port (forwarding 443 is required; 80 is optional in the end-game but required if you’re doing Step #3).
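What that rule looks like depends entirely on your firewall. As one hedged illustration, assuming a Linux-based firewall that uses iptables, the redirect could be expressed as a DNAT rule. The helper name `print_dnat_rule` and the addresses 203.0.113.10 (external) and 192.168.1.50 (internal server) are made-up placeholders; 9999 is the example port from the diagram. The sketch only prints the rule so you can review it before applying anything:

```shell
# Hypothetical helper: prints (does NOT apply) an iptables DNAT rule that sends
# TCP port 443 arriving on EXTERNAL_IP to INTERNAL_IP:INTERNAL_PORT.
# Usage: print_dnat_rule EXTERNAL_IP INTERNAL_IP INTERNAL_PORT
print_dnat_rule() {
    printf 'iptables -t nat -A PREROUTING -d %s -p tcp --dport 443 -j DNAT --to-destination %s:%s\n' \
        "$1" "$2" "$3"
}

# All three values below are placeholders; substitute your own.
print_dnat_rule 203.0.113.10 192.168.1.50 9999
```

Depending on the rules you already have, the forwarded traffic may also need to be allowed in the FORWARD chain.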

Important: If you have multiple IP addresses and you want to set up SSL certs for subdomains that point at different IPs, you will need to account for this in your firewall configuration to ensure that the associated certificate registration egresses on the same IP address as it ingresses! Stated a different way: if your firewall is configured to take all internal traffic from a given subnet and pass it out of IP address A, but your DNS zone A record for a domain/subdomain is on external address B, you will need to ensure the registration traffic for that domain/subdomain exits your network on address B. If it does not, registration with Let’s Encrypt will fail.

Important: If you have another internal service that needs to share 443, your firewall configuration needs to be much more selective about the inbound IP addresses that redirect to your Let’s Encrypt container so that all other addresses are routed to the other service(s) in your network.

Once your firewall is configured, you’re ready to either test it or skip forward to Let’s Encrypt.

3. The Test Server

To reiterate: the purpose of this step is to verify your Let’s Encrypt service is actually going to get touched from the internet during the registration process. If your configuration is wrong: you’re going to lock yourself out in time-out for potentially multiple hours so you can think about what you’ve done.

For this example, we’re using Docker on the internal server that is being mapped to by the firewall. Naturally we’re assuming that the server’s firewall is configured to allow port 80 and nothing else is using that port on the host.

    $ echo "Hello :-)" > index.html
    $ docker run \
        --rm \
        --net host \
        -v "$PWD":/usr/share/nginx/html:ro \
        nginx

Now, try to use a web browser to access the subdomain name you added in Step #1. It should return to you that greeting.
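If you would rather check from a terminal, the same test can be sketched with `curl`. This assumes `curl` is available; the helper name `greeting_matches` is hypothetical. Ideally run it from a machine outside your network, since whether a request from the inside to your own external address gets routed back in depends on your firewall:

```shell
# Hypothetical helper: succeeds when the page body at URL equals EXPECTED.
# Usage: greeting_matches URL EXPECTED
greeting_matches() {
    # -f fails on HTTP errors, -sS stays quiet but still reports errors,
    # --max-time keeps a misrouted request from hanging indefinitely.
    # Command substitution strips the trailing newline echo wrote to index.html.
    [ "$(curl -fsS --max-time 10 "$1")" = "$2" ]
}
```

For example, `greeting_matches http://www.local.us.com/ "Hello :-)" && echo "Firewall OK"` reports success only when the greeting comes back unchanged.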

When you’re finished testing, use CTRL+C to stop the nginx server (which will auto-remove itself, --rm).

Congratulations: you’re ready for Step #4 and can now close port 80 on your firewall if you would like.

4. Let’s Encrypt via Docker

There are numerous guides available for setting up Let’s Encrypt as a service in your host OS. However, I used the Docker image from LinuxServer.io, linuxserver/letsencrypt, so that the system exposed to the internet to get the keys is not the same one that uses them. The configuration was straightforward, as detailed in their README.

First you’ll need an external space, or a docker volume, to cache the container’s configuration and resulting keys. If you are using an external file system location, make sure it is owned by a user and group that will be made available to the Let’s Encrypt service we’re about to create:

    # mkdir -p /var/letsencrypt
    # chown -R USER:GROUP /var/letsencrypt

Create a container that will run in privileged mode with the various environment variables configured as you need.

    docker create \
        --privileged \
        --name=letsencrypt \
        -v <path to data, e.g., /var/letsencrypt>:/config \
        -e PGID=$(getent group GROUP | cut -d: -f3) -e PUID=$(id -u USER) \
        -e EMAIL=<email> \
        -e URL=local.us.com \
        -e SUBDOMAINS=www \
        -p <preferred port>:443 \
        -e TZ=<timezone> \
        linuxserver/letsencrypt

The <preferred port> can be something other than 443 if you have another service using 443 on the host system. However, be sure to configure your firewall to forward the external 443 calls to this preferred port (per the diagram example, 9999).

Once you have configured your firewall (if necessary), start the container you just created (docker start letsencrypt). View the log (docker logs letsencrypt) to verify registration has completed. After a short time, the log should show success:

Congratulations! Your certificate and chain have been saved at...
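If you want to script that check rather than eyeball the log, a minimal sketch follows. It assumes the container is named letsencrypt as in the example above and that the success line begins with "Congratulations!"; the helper name `registration_succeeded` is hypothetical:

```shell
# Hypothetical helper: succeeds once the container log contains the success line.
# Usage: registration_succeeded [CONTAINER_NAME]
registration_succeeded() {
    # docker logs can write to both stdout and stderr, so merge them before searching.
    docker logs "${1:-letsencrypt}" 2>&1 | grep -q 'Congratulations!'
}
```

For example, `registration_succeeded && echo "Certificate issued"` stays silent until the registration has completed.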

Sticking with this example, in /var/letsencrypt you will find several new paths, specifically of interest:

./etc/letsencrypt/live/local.us.com

The full-chain and private-key PEMs are in that location, linked back to the staging area which is maintained by the container (which has to keep them up-to-date every couple of months).
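Since the container renews the certificate on its own, it can be handy to confirm how long the current one has left. Here is a minimal sketch, assuming `openssl` is installed and that the certificate uses the standard Certbot file name fullchain.pem; the helper name `cert_valid_for_days` is hypothetical:

```shell
# Hypothetical helper: succeeds when CERT_FILE is still valid DAYS days from now.
# Usage: cert_valid_for_days CERT_FILE DAYS
cert_valid_for_days() {
    # -checkend N exits 0 only if the certificate does not expire within N seconds.
    openssl x509 -in "$1" -noout -checkend "$(( $2 * 86400 ))" >/dev/null
}
```

For example, `cert_valid_for_days /var/letsencrypt/etc/letsencrypt/live/local.us.com/fullchain.pem 14 || echo "Renewal may be stuck"` warns when fewer than 14 days remain. Running `openssl x509 -in` on the same file with `-noout -subject -enddate` prints the subject and the expiry date.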

If the exposed volume path for those keys is not on the server hosting the other services where you plan to use them, you’ll need a way to keep them in sync with that server (or the others that need it). See Migrating the SSL Keys for more details on one option.

5. Migrating the SSL Keys

Under the Nice to Have column is a means to serve the keys over to the services that need them. One simple setup is an rsync-over-SSH cron job that pulls keys from one server to the other.

Special Note: Because it came with its own quirks, this example is going to provide details of how to accomplish these steps if you’re using a Synology DSM v6+.

DSM Users: Synology’s DSM on the NAS requires that remote SSH access can only be performed by users in the admin group, which is an unfortunate requirement since, when setting up shared folders, the default permissions are full access for that group. So be vigilant in disabling access for all other users.

Once you’ve created that user and set up permissions to the letsencrypt path in a way that is appropriate, create an SSH key for that user at the destination server.

    # ssh-keygen -t rsa
    # # Set the name to something other than ~/.ssh/id_rsa if you already have a key.
    # # Press Enter for the two pass phrase questions
    # ssh-copy-id -i ~/.ssh/KEY_NAME.pub REMOTE_USER@REMOTE_SERVER
    # eval `ssh-agent -s`
    # ssh-add ~/.ssh/KEY_NAME

Warning: Since the key has no passphrase, limit what its user is allowed to access, which in this case is your SSL cert.

DSM Users: The result of the ssh-copy-id command will foul up the remote user’s home directory permissions, rendering SSH on the DSM inoperable for that user. You’ll need to fix the user’s permissions to 0755 for the home directory, 0700 for .ssh, and 0644 for .ssh/authorized_keys.
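Those three permission fixes can be applied in one go. The following is a minimal sketch, to be run on the DSM as that user (or as root); the helper name `fix_ssh_permissions` is hypothetical, and you pass in the path to the remote user’s home directory yourself:

```shell
# Hypothetical helper: applies the permissions listed above to HOME_DIR.
# Usage: fix_ssh_permissions HOME_DIR
fix_ssh_permissions() {
    # Stop at the first failure so a wrong path is noticed immediately.
    chmod 0755 "$1" &&
    chmod 0700 "$1/.ssh" &&
    chmod 0644 "$1/.ssh/authorized_keys"
}
```

Calling `fix_ssh_permissions "$HOME"` while logged in as the remote user covers the common case.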

Test the above connection from the destination server to the server hosting the keys by connecting over ssh:

# ssh REMOTE_USER@REMOTE_SERVER

It should log straight into the server with no prompts.

If it does not, add -vvv to the ssh command to get a nice, verbose output. Verify your key is being passed. If, after it is passed, the server continues offering other challenges until it requests the password, double-check the permissions of the home directory (the special note for DSM users may apply to you too).

Next, at the destination server, we need to configure a cron job that runs periodically. We can do this with a simple bash script:

    #!/bin/bash
    rsync \
        -e ssh \
        -rLvz \
        REMOTE_USER@REMOTE_SERVER:/LETS_ENCRYPT_VOLUME/etc/letsencrypt/live \
        /etc/letsencrypt

    # Set permissions
    find /etc/letsencrypt -name "*.pem" -exec chmod 600 {} \;

The -rL flags recursively fetch directories and copy the files that the links point to (since live contains links to staging). The -vz flags enable verbose output and compression.

DSM Users: Your LETS_ENCRYPT_VOLUME must be a shared folder, which means it will be situated on one of your volumes (i.e., /volumeN). The path for LETS_ENCRYPT_VOLUME must be prefixed with that volumeN reference since we’re using SSH with rsync.

Make the script executable (chmod a+x) and place it in the /etc/cron.XXXX of your choice (e.g., /etc/cron.monthly). For what it’s worth, the Let’s Encrypt service is only really going to need to update the certificate every couple of months, so hourly is probably unnecessary. 😉

6. Internal DNS Aliasing

The functional purpose of this step is to ensure the internal services using SSL get accessed using the valid, external subdomains you’ve registered for your SSL certificate. Moreover in this example, we’re not exposing those services to the internet. Therefore with this step, we’re internally forwarding the valid external FQDNs by creating a Forward Lookup Zone (FLZ) with A records that will, internally to the network, point those requests back at the internal services.

Recall: for this example, our internal domain is us.local, and local is not a valid top-level domain. Your new, external subdomain you just registered to work with the SSL certificate is local.us.com because you own us.com, and com is a valid top-level domain. You then added another A record for a sub-subdomain which you want to use for an internal HTTPS web service: www.local.us.com. And per the example, let’s say your web server’s internal host name actually is www (i.e., its internal FQDN is www.us.local). Another way to summarize this is that your current Forward Lookup Zone (FLZ) is us.local with an A record for www.

First, on your internal DNS server, create a new FLZ for local.us.com (i.e., the new external FQDN for your services, which is a subdomain of your original FQDN). Then create an A record for www in that new FLZ that points to your internal web server’s IP address. Next, remove the A record for www from the us.local FLZ. Finally, replace it by adding a CNAME (alias) called www to the us.local FLZ, which redirects to the external FQDN www.local.us.com.

You may be wondering why not just leave the internal FLZ as is and simply add the external one. The point of the above is to ensure that anyone, out of habit or otherwise, who tries to navigate to just the hostname (http://www) will redirect the request to the new canonical name www.local.us.com, which is the valid FQDN for your SSL cert. Once you’ve followed through with the above, you can verify this behavior using nslookup www, for example.
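For a scripted version of that check, here is a minimal sketch, assuming `dig` is installed and that you run it from a machine that uses your internal DNS server. The helper name `cname_points_to` is hypothetical:

```shell
# Hypothetical helper: succeeds when ALIAS_FQDN is a CNAME for CANONICAL_FQDN.
# Usage: cname_points_to ALIAS_FQDN CANONICAL_FQDN
cname_points_to() {
    # dig prints the CNAME target with a trailing dot (www.local.us.com.),
    # so the expected name is compared with a dot appended.
    [ "$(dig +short "$1" CNAME)" = "$2." ]
}
```

With the example names, `cname_points_to www.us.local www.local.us.com && echo "Alias OK"` confirms that the internal name now resolves through the new canonical name.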

Conclusion

If there is one take-away from all the above: you can deploy a valid SSL certificate for internal services, for free, thanks to Let’s Encrypt, LinuxServer.io, and a little bit of creative thinking.

Next time, we’ll talk about taking a self-hosted GitLab server and enabling HTTPS thanks to our SSL keys, and thus gaining a number of other great features in the process. Until then, enjoy your SSL keys.

If You Found This Helpful…

Please: consider donating to support these free services:

- Let’s Encrypt for this service

- LinuxServer.io for providing the excellent Docker image to use that service

- EFF (Electronic Frontier Foundation) on behalf of Let’s Encrypt

Disclosure: As with all of our articles and public training: nothing is sponsored content. Geon Technologies, LLC, is not affiliated with nor compensated by any of these services.