Introduction

Over the past couple of years we have showcased a few demos that use Docker images and containers to stand up various capabilities, such as running the latest version of UHD with Ettus Research USRP hardware like the B205mini USB3 transceiver. Recently, we decided to revamp that capability and make it more flexible through a variety of changes aimed at using Docker Compose. For this (re-)introduction post, however, we’ll focus on building from our repository version, which includes many helper scripts.

Note: The old repositories have been renamed to old-docker-redhawk, etc. in favor of these new images, all of which are found within our docker-redhawk repository (described here) and on our docker.io organization. Once built, the image names are all prefixed with geontech/redhawk-.

The images produced from this build represent a slightly modified RPM-based installation and include one for Geon’s fork of the REST-Python server (beta, 2.0.5 compatible). Because the installation is RPM-based, the images are based on CentOS 7; however, all development of these images and scripts took place on both CentOS 7 and Ubuntu 16.04. One person has already been using them on Windows with Docker Compose.

Security Note: The repository’s scripts, Makefile, etc. all assume that the user running them is allowed to call on the Docker daemon. A simple way to allow this is to add your user to the docker group; however, this can be a security risk. Use discretion.

Update: 9 June 2017. Today we pushed out a slight nudge of the images. The versions are still 2.0.5, but the supervisord-based ones (i.e., most of the images) have all gained startsecs as a field to help ensure the container exits if the related command fails to start within some number of seconds. Additionally, a more thoroughly tested version of REST-Python is now baked into the webserver image. If you’ve already built or downloaded the images, just re-pull them.

Building the Images

You could simply reference the geontech/redhawk-...:VERSION image in a Compose file, for example, and the pre-built image will be downloaded to your system. However, if you would like to run through the whole build and get the helper scripts, follow these steps.

Note: The main differences are that pulling the pre-built images lets you skip an hour or two of compiling, whereas our build process tags whatever version it built as both the REDHAWK version and latest, which facilitates shorter run commands and instance checking in the helper scripts. If you just want to use the pre-built images, manually docker pull ... each image of interest and run make helper_scripts.
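For the pre-built route, the pull step can be scripted. The following is only a minimal sketch and not part of the repository: the function name pull_redhawk_images is hypothetical, and it assumes that the tag you pass is actually published for each image on docker.io.

```shell
# Hypothetical helper (not part of docker-redhawk): pull pre-built images
# by short name, prefixing each one with geontech/redhawk-.
pull_redhawk_images() {
    local tag="$1"
    shift
    local name
    for name in "$@"; do
        # Stop at the first failed pull so a missing image is noticed early.
        docker pull "geontech/redhawk-${name}:${tag}" || return 1
    done
}

# Example usage, run from the cloned docker-redhawk directory:
#   pull_redhawk_images latest omniserver domain gpp
#   make helper_scripts
```

Since the helper scripts look for the latest tag, pulling latest (where published) is likely the simplest choice; if you pull a specific version instead, you may need to tag it as latest yourself.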

The first step in building our images from the repository is to clone it:

git clone https://github.com/GeonTech/docker-redhawk.git

cd docker-redhawk

Next, decide what features interest you. We’ve provided base images for the following (again, prefix each name with geontech/redhawk-):

| Name | Description |

|---|---|

base |

Base dependencies for REDHAWK SDR including installation of a Yum repository reference. All other image names depend on this one. |

runtime |

All REDHAWK Runtime libraries pre-installed without any of the extras like a Domain definition, etc. It’s meant to be the basis of the Domain, various Device launchers, and the REST-Python webserver |

omniserver |

Runner — provides an instance of the OmniORB Naming and Event services. |

webserver |

Runner — provides an instance of the REST-Python server integrated with the specified OmniORB server address. |

domain |

Runner — provides a REDHAWK Domain instance |

gpp |

Runner — creates the node definition and starts a REDHAWK SDR GPP instance |

rtl2832u |

Runner — creates the node definition and starts an RTL2832U instance |

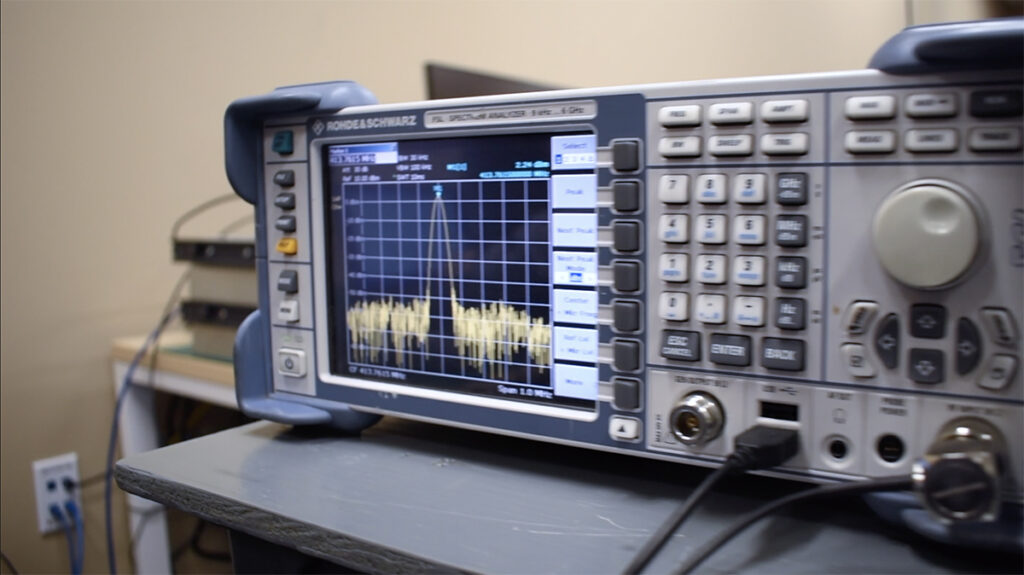

usrp |

Runner — creates the node definition and starts an USRP_UHD instance using UHD Version 3.10 (i.e., compatible with recent USRP devices such as the B205mini, etc.). |

bu353s4 |

Runner — creates the node definition and starts a BU353S4 instance. |

You can then make each as you would with other targets:

make geontech/redhawk-usrp

After a short time, you will have a new image tagged with the related REDHAWK version as well as latest. The latter tag is to simplify the portability of the helper scripts.

OmniORB Services

REDHAWK SDR requires the OmniORB naming and event services to provide its full capabilities, and Docker-REDHAWK is no different. So, you probably noticed that we have provided an omniserver image definition. This does not mean you’re required to run it to use Docker-REDHAWK.

One of our goals with this project was to make the containers runnable either in a continuous-integration-style setting or in direct integration with a CentOS 7-based REDHAWK SDR system. We’ve facilitated this by providing an environment variable and configuration script that update the internal /etc/omniORB.cfg file to point back to the specified OMNISERVICEIP. In this way, you can run a container of just the USRP image pointed back at your own OmniORB server address and Domain without having to run anything else for REDHAWK on your system.

Moreover, the helper scripts provided in the repository allow you to specify that option (-o or --omni). Doing so populates the OMNISERVICEIP variable for the spawned container, which in turn runs the auto-configuration script to populate that address into /etc/omniORB.cfg.
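To make the mechanism concrete, here is a minimal sketch of what such an auto-configuration step might look like. It is not the repository’s actual script: the function name write_omni_cfg is hypothetical, and the ports (2809 for the naming service, 11169 for omniEvents) are assumed to be the usual REDHAWK defaults.

```shell
# Hypothetical sketch: write an omniORB.cfg that points the naming and
# event service references at the given address.
write_omni_cfg() {
    local omni_ip="${1:-127.0.0.1}"
    local cfg_file="${2:-/etc/omniORB.cfg}"
    cat > "$cfg_file" <<EOF
InitRef = NameService=corbaname::${omni_ip}:2809
supportBootstrapAgent = 1
InitRef = EventService=corbaloc::${omni_ip}:11169/omniEvents
EOF
}

# Example usage inside a container at start-up:
#   write_omni_cfg "$OMNISERVICEIP" /etc/omniORB.cfg
```

Passing the output path as a second argument keeps the sketch easy to try against a scratch file before touching /etc/omniORB.cfg.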

Helper Scripts

Building everything by simply running make with no arguments will result in linking several executable scripts into the docker-redhawk top-level directory. Alternatively, one could run make helper_scripts to create the various file system links.

Each linked script fits a consistent convention:

- No arguments? Status output as it pertains to that image (if applicable).

- Providing -h or --help prints a help message of all options with some examples.

- Runner scripts: script command [name] [options]. For example, gpp start MyGPP will start a GPP Node named MyGPP with all other default arguments specified.

The scripts also share a common internal structure. Each has a verbose setup/configuration section with command line options, flags, helpful print-outs, etc. If the script is for a runner, that configuration section is followed by the actual docker run ... execution section that you can mimic in Docker Compose files. This is meant to simplify the transition from using the helper scripts to mock up a system to implementing a Docker Compose-based deployment.
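As a rough illustration of that two-part layout, here is a heavily simplified sketch of a runner. It is not the repository’s actual domain script: the function name start_domain_sketch is hypothetical, only the -o/--omni option and the OMNISERVICEIP variable come from this post, and the real image may expect additional settings.

```shell
# Hypothetical runner sketch (not the repository's actual script).
start_domain_sketch() {
    # --- Configuration section: defaults, then command line options ---
    local omni_ip=""
    local name="REDHAWK_DEV"
    while [ "$#" -gt 0 ]; do
        case "$1" in
            -o|--omni)
                [ "$#" -ge 2 ] || { echo "missing value for $1" >&2; return 1; }
                omni_ip="$2"
                shift 2
                ;;
            *)
                name="$1"
                shift
                ;;
        esac
    done
    [ -n "$omni_ip" ] || { echo "an OmniORB address is required" >&2; return 1; }

    # --- Execution section: the part you would mirror in a Compose file ---
    docker run -d \
        --name "$name" \
        -e OMNISERVICEIP="$omni_ip" \
        geontech/redhawk-domain:latest
}
```

In a Compose file, the execution section would translate roughly to the image, container_name, and environment keys of a service definition.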

See the README.md for an extensive list of the helper scripts. We’ll be covering them in a series of blog posts and at least one demo video in the future. In this post, we’ll start with the basics.

Basic Examples

In this section, we’ll cover some of the basic things you can do to get started using the Helper Scripts.

Domain

Let’s stand up a Domain. The Domain needs the address of an OmniORB naming and event service. If you already have one on your network:

./domain start --omni OMNIORB_ADDRESS

If you do not have a server running, start one and the Domain:

./omniserver start

./domain start

Note: In this example, because there will be a container named omniserver running, the domain script automatically detects its IP address and applies the arguments for you.
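If you ever need that address yourself, for example to pass it to --omni by hand, a lookup along these lines should work. This is a sketch of one possible approach using docker inspect, not necessarily how the domain script does it; the function name container_ip is hypothetical.

```shell
# Hypothetical sketch: print the IP address of a running container by name.
# If the container is attached to several networks, the addresses are
# printed back to back, so this is best suited to single-network containers.
container_ip() {
    docker inspect \
        -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' \
        "$1"
}

# Example usage:
#   ./domain start --omni "$(container_ip omniserver)"
```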

The result of either domain start run is a container named with the default domain name: REDHAWK_DEV. Think of this like running nodeBooter -D in the terminal or starting the Domain with the defaults from the IDE’s Target SDR.

The Domain image has the standard Waveforms and Components pre-installed. As long as you have a GPP connected to the Domain, you should be able to run any that can run in a standard REDHAWK system.

GPP

Perhaps you want to add your Docker host system to a REDHAWK Domain as a GPP Device. The related helper script is gpp:

./gpp start MyGPP

That command of course assumes your host system is also running the OmniORB services and the default Domain, REDHAWK_DEV. If it is not, specify the --omni and --domain options.

The resulting container in any case will be named with the node name you provided, MyGPP in this case, suffixed with the Domain name to which it is attached. So the full container name in this example is: MyGPP-REDHAWK_DEV.
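Knowing the naming rule makes it easy to find a node’s container from your own scripts. The small sketch below just reproduces the convention described above; the function name node_container_name is hypothetical.

```shell
# Hypothetical sketch: build a node container name as <node>-<domain>,
# defaulting to the REDHAWK_DEV Domain.
node_container_name() {
    local node="$1"
    local domain="${2:-REDHAWK_DEV}"
    printf '%s-%s\n' "$node" "$domain"
}

# Example usage:
#   node_container_name MyGPP            # prints MyGPP-REDHAWK_DEV
#   docker logs "$(node_container_name MyGPP)"
```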

Development Environment

Another image included is development, which includes the REDHAWK IDE and a configuration script that tries to help you map your external user ID to the internal one running the IDE. The net effect is that you can mount an external directory location to serve as your workspace and have the files saved there, from the container, be editable by the host user.

Note: Running Eclipse (the IDE) from within a Docker container can be challenging. Presently, the method used in the rhide script has been tested on CentOS 7 and Ubuntu 14.04. It’s possible a similar method can work for macOS users who have XQuartz installed; however, this is not included in this release.

Security Note: The script that modifies the internal user’s ID to map to the external user is a charming example of how providing Docker daemon access to certain users can be both a powerful utility and a security risk. Use discretion.

Two main scripts are provided to facilitate standing up the IDE as well as volumes for your work to persist: rhide and volume-manager, respectively.

REDHAWK IDE

The rhide script has two main functions. First, it can help you manage your SDRROOT and Workspace Docker Volumes by internally calling the volume-manager script. Second, it runs the IDE in a container and maps the caller’s user ID to the internal user. To run the IDE, however, you must specify both the SDRROOT and a workspace, though the latter can be an absolute path on the host operating system.

First, we’ll create our two volumes:

./rhide create --sdrroot MyRoot --workspace MyWorkspace

Next, run the IDE (again, assuming omniserver is running locally):

./rhide run --sdrroot MyRoot --workspace MyWorkspace

The IDE should start and be ready to connect to your Domain(s).

If you have a Domain container running already, stop it (./domain stop ...). Then restart it with the --sdrroot option so that your IDE and Domain can now share the SDRROOT volume.

./domain start [YourDomainName] --sdrroot MyRoot

Now when you drag projects to the Target SDR, they’ll be installed into the MyRoot Docker Volume. And since the volume is separate from the IDE and Domain containers, its layer of contents will persist between runs of either container type.

Volume Manager

The volume-manager script has a few use cases as well.

Usage / Status

When provided no arguments, similar to the other scripts, it provides a status-like output indicating what types of volumes exist (using each volume’s label to sort them out) as well as which volumes are mounted to which Domain or IDE containers. This listing can also be filtered by type (sdrroot vs. workspace):

./volume-manager sdrroot

Results in the following:

SDRROOT Volumes:

MyRoot

Domains:

MyRoot is not mounted.

Development/IDEs:

MyRoot is not mounted.

Creation

The natural second use case: it can create simple Docker volumes that are labeled for use as either an SDRROOT or an IDE workspace (if you choose to go that route). For example:

./volume-manager create workspace MyWorkspace2

The above example would create MyWorkspace2 as a volume that you can mount to the IDE or share with others.
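Under the hood, labeled volumes like these can be created and filtered with plain Docker commands. The sketch below shows the general idea only; the label key redhawk.volume.type is a made-up placeholder, and the volume-manager script may well use a different key and extra options.

```shell
# Hypothetical label key; the repository's scripts may use a different one.
LABEL_KEY="redhawk.volume.type"

# Create a Docker volume labeled with its intended use (sdrroot or workspace).
create_labeled_volume() {
    local kind="$1"
    local name="$2"
    docker volume create --label "${LABEL_KEY}=${kind}" "$name"
}

# List the names of volumes carrying the given type label.
list_labeled_volumes() {
    docker volume ls --quiet --filter "label=${LABEL_KEY}=$1"
}

# Example usage:
#   create_labeled_volume workspace MyWorkspace2
#   list_labeled_volumes workspace
```

Filtering on a label rather than on a naming pattern means you can name volumes however you like and still sort them by purpose.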

Conclusion

So that’s it then, an introduction to Geon’s Docker-REDHAWK. We hope you enjoy the rapid integration capabilities this provides, being able to deploy a Docker host as a binary-compatible contributor to a standard REDHAWK environment. And keep an eye out here on our website for future posts on how we’re using these images.